There, I said it, and as Doja Cat raps, “I said what I said.”

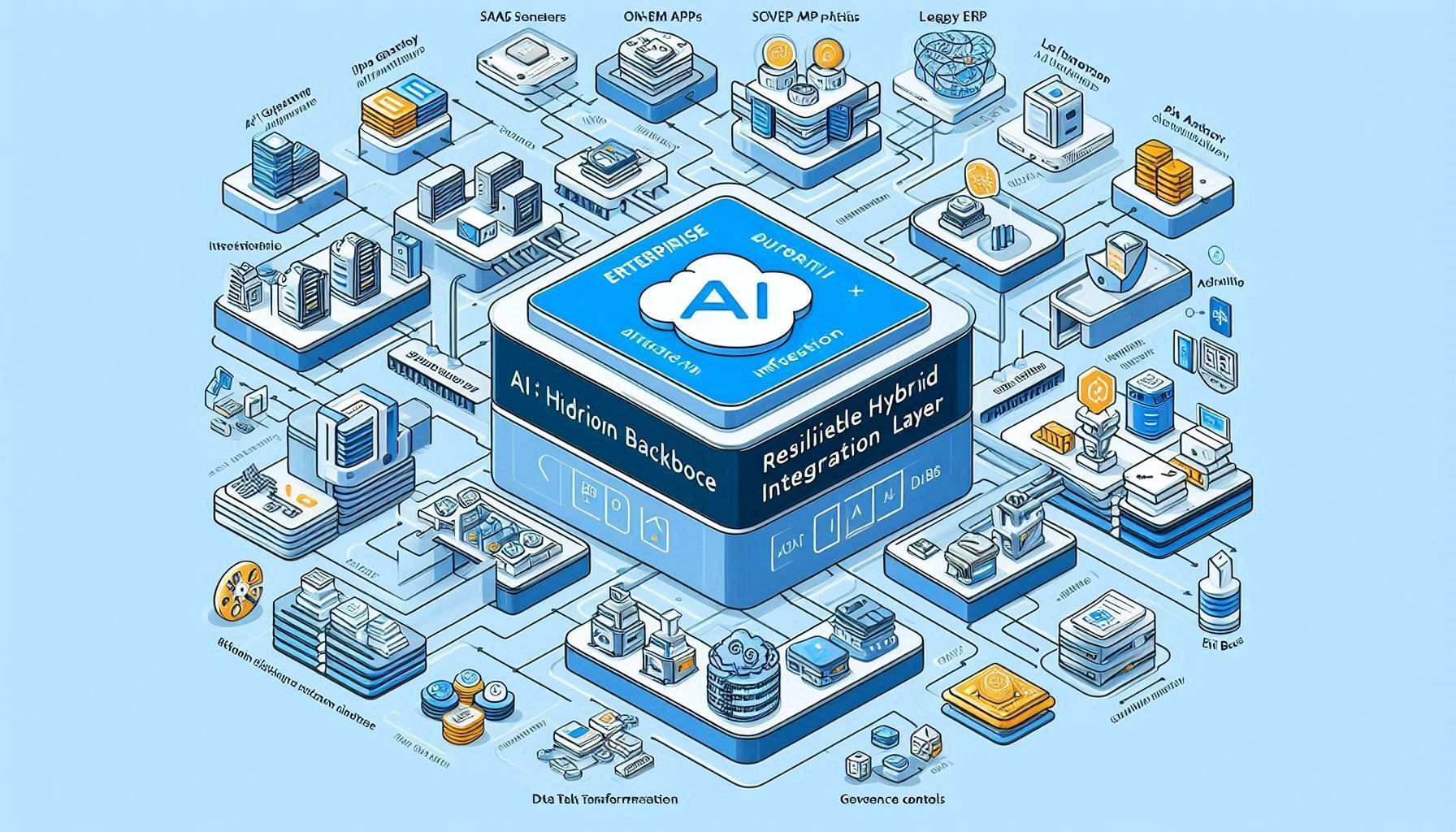

Artificial Intelligence (AI) is not a strategy by itself. In enterprise IT, the true differentiator is how well AI is woven into existing systems, workflows, and infrastructure. Without a robust hybrid integration backbone, even the most advanced model remains a demo.

For enterprise architects, integration architects, and IT executives, the message is clear: AI success is an integration problem first, and a modeling problem second.

AI models capture headlines, but value is unlocked only when data flows seamlessly, securely, and at scale.

It’s not just me saying this. DDN notes that hybrid AI infrastructure is the backbone of the enterprise AI revolution, since workloads must span edge, on-prem, and cloud to balance performance, cost, and governance (DDN).

Similarly, Palo’s Publishing emphasizes that hybrid cloud strategies for AI workloads are essential for latency management, compliance, and cost optimization (Palo’s Publishing).

The conclusion: if your integration layer is weak, your AI will remain fragile.

Hybrid AI Infrastructure Best Practices

Enterprise AI requires a stronger integration strategy than traditional workloads. Here are proven practices for designing resilient hybrid integration layers:

Data-first design – Map ingestion, transformation, and lineage pipelines before model deployment.

Loose coupling & modular APIs – Prevent lock-in and enable flexible upgrades.

Resilient orchestration & fallback logic – Build retries, circuit breakers, and failover into workflows.

Observability everywhere – Instrument pipelines for latency, errors, and drift.

Governance embedded in flows – Enforce encryption, lineage, and compliance at every boundary.

Hybrid execution awareness – Route workloads dynamically to cloud, on-prem, or edge.

Incremental rollout – Start with a minimal, end-to-end pilot and extend iteratively.
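To make the "retries, circuit breakers, and failover" practice concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a production library: the names `CircuitBreaker`, `CircuitOpenError`, and `call_with_retries` are hypothetical, and the thresholds are placeholder values you would tune per service.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls are short-circuited."""


class CircuitBreaker:
    """Hypothetical minimal breaker: opens after N consecutive failures."""

    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures      # illustrative default
        self.reset_after_s = reset_after_s    # illustrative default
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        # While open, reject calls until the cool-down has elapsed.
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after_s:
                raise CircuitOpenError("circuit open; skipping call")
            # Cool-down over: allow one trial call (half-open state).
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success closes the breaker fully
        return result


def call_with_retries(breaker: CircuitBreaker, fn: Callable[[], T],
                      attempts: int = 3, base_delay_s: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry fn through the breaker with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return breaker.call(fn)
        except CircuitOpenError:
            raise  # retrying an open circuit only adds load
        except Exception as exc:
            last_error = exc
            if attempt < attempts - 1:
                sleep(base_delay_s * (2 ** attempt))
    assert last_error is not None
    raise last_error
```

A caller would wrap each downstream dependency (a model endpoint, a feature store, an ERP API) in its own breaker, so that one failing service is isolated instead of dragging down the whole workflow.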

Data Flow Orchestration in Hybrid AI Systems

Enterprise architects must think beyond ETL jobs. AI workloads require real-time orchestration:

Streaming ingestion from IoT devices, SaaS, and APIs.

Routing decisions that place compute wherever latency, compliance, or cost requirements demand it.

Adaptive workflows that recover gracefully when a model or service fails.

This is where integration frameworks for legacy systems play a critical role. Connecting AI models to ERP, CRM, and custom applications is non-negotiable for realizing enterprise value.
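The routing decisions described above can be sketched as a small policy function. This is a simplified example, assuming each execution target advertises a typical latency, a relative cost, and the regions where data may be processed. The `Target` and `Workload` types, their fields, and the `route` function are hypothetical names for illustration only.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Target:
    name: str                  # e.g. "edge", "on_prem", "cloud"
    latency_ms: float          # typical round-trip latency (assumed figure)
    cost_per_hour: float       # relative cost (assumed figure)
    regions: FrozenSet[str]    # jurisdictions where data may be processed


@dataclass(frozen=True)
class Workload:
    name: str
    max_latency_ms: float      # latency budget for this workload
    data_region: str           # where the data is legally allowed to live


def route(workload: Workload, targets: List[Target]) -> Optional[Target]:
    """Pick the cheapest target that satisfies compliance and latency."""
    eligible = [
        t for t in targets
        if workload.data_region in t.regions           # compliance is a hard filter
        and t.latency_ms <= workload.max_latency_ms    # so is the latency budget
    ]
    # Cost only breaks ties among targets that already meet the constraints.
    return min(eligible, key=lambda t: t.cost_per_hour, default=None)


targets = [
    Target("edge", 5.0, 4.0, frozenset({"eu"})),
    Target("on_prem", 20.0, 2.0, frozenset({"eu"})),
    Target("cloud", 120.0, 1.0, frozenset({"eu", "us"})),
]

inference = route(Workload("inference", 50.0, "eu"), targets)
training = route(Workload("training", 10_000.0, "eu"), targets)
```

Treating compliance and latency as hard filters and cost as the tiebreaker is one possible design choice. A real router would also weigh current load, data gravity, and egress fees.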

Use Case: Predictive Maintenance Across Hybrid Environments

A global manufacturer illustrates the point:

Edge ingestion: Plant sensors stream telemetry through local gateways.

Hybrid routing: Time-sensitive inference runs on-prem; heavy training tasks move to cloud GPUs.

Fallback logic: If AI inference fails, a rules-based system steps in.

Governance controls: Data lineage and access rules apply across environments.

Observability dashboards: IT teams monitor throughput, errors, and system health.

Without resilient hybrid integration layers, this architecture would collapse into brittle scripts and manual patchwork.
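As a rough illustration of the fallback step in this use case, the sketch below wraps a model call and drops to a static rule when inference fails. The field names (`vibration_mm_s`, `temp_c`), the thresholds, and the function names are all assumptions made for the example, not details from the manufacturer's system.

```python
from typing import Callable, Dict, Tuple

Reading = Dict[str, float]


def rules_based_check(reading: Reading) -> bool:
    """Hypothetical static rule: flag a machine when a fixed limit is exceeded."""
    # Thresholds are placeholders; real limits come from equipment specs.
    return (reading.get("vibration_mm_s", 0.0) > 7.1
            or reading.get("temp_c", 0.0) > 90.0)


def predict_with_fallback(reading: Reading,
                          model: Callable[[Reading], float]) -> Tuple[bool, str]:
    """Return (needs_maintenance, source), where source is 'model' or 'rules'."""
    try:
        score = model(reading)          # model returns a failure probability
        return score >= 0.5, "model"
    except Exception:
        # Inference failed (timeout, bad input, service down): degrade gracefully.
        return rules_based_check(reading), "rules"
```

Returning the decision source alongside the result lets the observability dashboards track how often the fallback path is taken, which is itself a useful health signal.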

Challenges of AI Component Interoperability

Even with good intentions, integration projects fail for a handful of recurring reasons:

| Challenge | Business Impact | Mitigation |
|---|---|---|
| Point-to-point sprawl | Expensive maintenance | Use service mesh, API gateways, contracts |
| Blind spots in observability | Undiagnosed outages | Implement tracing, logs, and monitoring |
| Weak governance enforcement | Compliance risk | Automate policy & lineage enforcement |
| Over-optimistic resilience | Service failures ripple across stack | Build retries, fallbacks, and chaos testing |
| Legacy lock-in | AI can’t connect to core apps | Deploy lightweight AI integration frameworks for legacy systems |

ROI: Integration as the Multiplier

For IT executives, integration investment delivers measurable ROI:

Faster rollout of new AI services via plug-and-play pipelines.

Lower rework costs when models or data sources change.

Stronger operational resilience, with graceful degradation under failure.

Regulatory assurance from traceability and policy enforcement.

Cost optimization through smart hybrid workload routing.

Integration isn’t overhead. It’s the multiplier that turns AI into enterprise value.

Final Word: Integration Is Your Strategic Moat

Models evolve. Vendors come and go. Data volumes keep growing. But your integration backbone, if designed for scale, resilience, and governance, will protect your AI investments over the long term.

There, I said it. And as Doja Cat reminds us, “I said what I said.”

Doja’s track “Paint the Town Red” samples “Walk On By.”

👉 Enterprise architects and IT leaders: before you fund the next AI pilot, ask how strong your integration backbone is. If the room goes quiet, start there.